Action localization with movemes

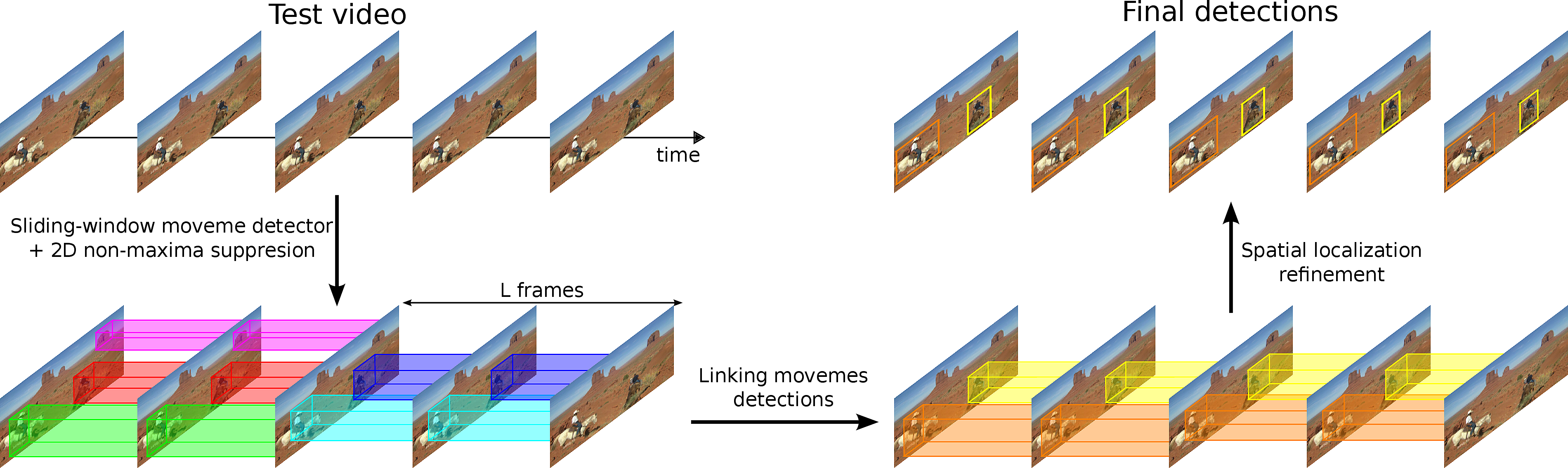

We propose a two-stage approach for action localization using both shape and motion cues. Our approach learns flexible spatio-temporal templates, which consist of sequences of spatio-temporal cuboids, called movemes. Movemes leverage the expressiveness of state-of-the-art features such as HOG, HOF and MBH to obtain a coarse spatial and fine temporal representation. In a first stage, we detect candidate movemes and perform temporal linking in order to discard temporally-inconsistent ones and to obtainspatio-temporal action detections. In a second stage, these action detections are improved by a spatial localization refinement using clustered HOGs with linear SVMs.The proposed approach outperforms other methods on the challenging UCF sports dataset, in terms of mean per- frame localization, and true/false detections. We also propose an evaluation protocol for localization using average- precision, as in object detection challenges. Furthermore, we test our method on the HighFive dataset, that consists of daily interaction activities.

Archetypal Analysis of large collection of images

For large image collection visualization, we proposed a robust generalization of

archetypal analysis, along with a fast optimization algorithm that allows to

scale to web-scale image collections. Archetypal analysis

is an unsupervised learning technique, which is related

to successful data analysis methods such as sparse coding and non-negative matrix factorization.

Robust archetypal analysis learns a

factorial representation of the data, and uncovers so-called archetypes that are

sparse convex combinations of data-points. The proposed approach yields

state-of-the-art results for codebook learning, and provides intuitive and

easy-to-visualize representations of huge collections of natural images. See examples and code here.

Object localization with minimal supervision

For object localization from images, we proposed a two-step approach that requires minimal supervision. For each image, supervision only tells whether the object is present or not, but not where. First, we compute a submodular cover that allows to single out a set of positive object windows. Second, we use smoothed latent-SVM in order to simultaneously classify and localize the objects. The proposed approach gives a 50\% relative improvement in mean average precision over the current state-of-the-art on PASCAL VOC 2007 detection. See paper here and code here.Summary of 2014 (and upcoming events in 2015)

- Ross Girschick is going to visit the LEAR team of Inria in January 2015.

- Mattis Paulin, Philippe Weinzaepfel, Zaid Harchaoui, are going to visit J. Malik's team in UC Berkeley for two weeks in December 2014.

Summary of 2013

- Zaid Harchaoui and Julien Mairal (LEAR-Inria) visited Jitendra Malik, Bin Yu, Nourredine El Karoui (UC Berkeley) for two weeks in December 2013.

- Georgia Gkioxari, PhD student of J. Malik (UC Berkeley), has done a two-month internship in the LEAR team of INRIA during summer 2013

- Jitendra Malik (UC Berkeley) has visited the LEAR team for one month during summer 2013

Summary of 2012

- Zaid Harchaoui and Cordelia Schmid (LEAR-INRIA) visited UC Berkeley for one week in December 2012

- Zaid Harchaoui (LEAR-INRIA) visited Jitendra Malik and Nourredine El Karoui (UC Berkeley) for ten days in September 2012

- Nourredine El Karoui (UC Berkeley) visited the LEAR team of INRIA for two weeks in August 2012

- Zaid Harchaoui (LEAR-INRIA) visited Jitendra Malik and Nourredine El Karoui (UC Berkeley) for two weeks in April 2012

Publications

- Y. Chen, J. Mairal, and Z. Harchaoui. Fast and robust archetypal analysis for representation learning. In CVPR Computer Vision and Pattern Recognition, 2014.

- H. O. Song, R. Girshick, S. Jegelka, J. Mairal, Z. Harchaoui, T. Darrell, et al. On learning to localize objects with minimal supervision. In ICML-31st International Conference on Machine Learning, volume 32, 2014.

- P. Weinzaepfel, G. Gkioxari, Z. Harchaoui, C. Schmid, and J. Malik. Action localization with sequences of movemes. submitted, 2013.

- J. Donahue, Y. Jia, O. Vinyals, J. Hoffman, N. Zhang, E. Tzeng, and T. Darrell. Decaf: A deep convolutional activation feature for generic visual recognition. ICML International Conference in Machine Learning, 2014.

- G. Gkioxari, B. Hariharan, R. Girshick, and J. Malik. R-CNNs for pose estimation and action detection. In CVPR, 2014.

- G. Gkioxari, B. Hariharan, R. Girshick, and J. Malik. Using k-poselets for detecting people and localizing their keypoints. In CVPR, 2014.

- S. Gupta, R. Girshick, P. Arbelaez, and J. Malik. Learning rich features from RGB-D images for object detection and segmentation. ECCV 2014.

- Z. Harchaoui, M. Douze, M. Paulin, M. Dudik, and J. Malick. Large-scale image classi- fication with trace-norm regularization. In CVPR’12 - IEEE Conference on Computer Vision and Pattern Recognition, Providence, United States, June 2012. IEEE.

- D. Oneata, J. Revaud, J. Verbeek, and C. Schmid. Spatio-Temporal Object Detection Proposals. In ECCV 2014 - European Conference on Computer Vision, Zurich, Switzerland, Sept. 2014. Springer.

- M. Paulin, J. Revaud, Z. Harchaoui, F. Perronnin, and C. Schmid. Transformation Pursuit for Image Classification. In CVPR 2014 - IEEE Conference on Computer Vision and Pattern Recognition, Columbus, United States, June 2014. IEEE.

- P. Weinzaepfel, J. Revaud, Z. Harchaoui, and C. Schmid. DeepFlow: Large displacement optical flow with deep matching. In IEEE Intenational Conference on Computer Vision (ICCV), Sydney, Australia, Dec. 2013.

Context

A recent trend in computer vision as well as in other fields is the advent of "big data", i.e. data which is inherently high-dimensional and challenging in many respects. Indeed, the field is witnessing an increasing number of large annotated image and video datasets now becoming available to researchers, along with an increasing number of benchmarks and challenges associated to these datasets. Such large datasets allow to expand the range of possibilities for tackling the major problems of image interpretation and scene understanding using large-scale learning techniques. However, designing principled and scalable statistical learning approaches from such big datasets is actually challenging. Furthermore, these datasets also bring to light not only major statistical and computational challenges but also core computer vision issues.

Objectives

The scientific objective of the HYPERION team is to take up these challenges and propose new principled large-scale statistical learning approaches for image interpretation and learning models for video understanding. The LEAR project-team, Pr Jitendra Malik's team, and Pr Nourredine El Karoui's team have done important work that we aim at leveraging to address these issues.

The LEAR project-team will bring its expertise in large-scale image retrieval, feature building for human action recognition, and large-scale statistical learning for visual recognition.

J. Malik's team will advance the project by bringing in its mastery of poselet-based approaches for classification into attributes, human action recognition, and high-level visual features design.

N. El Karoui's team will contribute with its sound knowledge in kernel random matrix theory, high-dimensional statistical analysis of linear classifiers, and randomized algorithms for large-scale eigenvalue problems.

A goal of this project is the convergence between large-scale learning algorithms, high-level feature design for computer vision, and high-dimensional statistical learning theory, that is the main strengths of each team.