Color in Computer Vision

Color Naming

Color names are linguistic labels that humans attach to colors. We use them routinely and seemingly without effort to describe the world around us. They have been studied primarily in the fields of visual psychology, anthropology and linguistics. In a computer vision context, color naming is the task of assigning linguistic color labels to image pixels. We have investigated the possibility of automatically learning color names from Google Image search.
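
To make the pixel-labeling task concrete, here is a minimal sketch that assigns each pixel the name of its nearest color prototype. It is not the learned model from the publications listed in this section: the prototype values are hand-picked assumptions, and `PROTOTYPES`, `name_pixel` and `name_image` are hypothetical names introduced only for illustration.

```python
# Hypothetical prototypes: hand-picked sRGB values for eleven basic English
# color terms. These are illustrative assumptions, not learned from images.
PROTOTYPES = {
    "black": (0, 0, 0),
    "blue": (0, 0, 255),
    "brown": (139, 69, 19),
    "grey": (128, 128, 128),
    "green": (0, 128, 0),
    "orange": (255, 165, 0),
    "pink": (255, 192, 203),
    "purple": (128, 0, 128),
    "red": (255, 0, 0),
    "white": (255, 255, 255),
    "yellow": (255, 255, 0),
}


def name_pixel(rgb):
    """Return the name of the prototype closest to rgb (squared Euclidean distance)."""
    def distance(name):
        return sum((a - b) ** 2 for a, b in zip(rgb, PROTOTYPES[name]))
    return min(PROTOTYPES, key=distance)


def name_image(image):
    """Label every pixel of an image given as rows of (R, G, B) tuples."""
    return [[name_pixel(px) for px in row] for row in image]
```

A learned model would replace the fixed prototypes with a per-name distribution over color values estimated from labeled or weakly labeled images. The labeling step, picking the most likely name for each pixel, keeps the same shape.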

Publications on this subject:

1. J. van de Weijer, C. Schmid, J.J. Verbeek, Learning Color Names from Real-World Images, Proc. CVPR 2007, Minneapolis, Minnesota, USA, 2007.

2. J. van de Weijer, C. Schmid, J. Verbeek, D. Larlus, Learning Color Names for Real-World Applications, IEEE Transactions on Image Processing, 2009.

Color Feature Extraction

Although color is commonly experienced as an indispensable quality in describing the world around us, state-of-the-art local feature-based representations are mostly based on shape description and ignore color information. The description of color is hampered by the many sources of variation that cause measured color values to vary significantly: changes in illuminant color, viewpoint, and acquisition material all influence the color values of the scene. We have investigated extending local shape description with color descriptors that are robust to photometric variations.
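
As a small illustration of what robustness to a photometric variation means, the sketch below uses normalized rgb (chromaticity), a standard representation that is unchanged when all three channels are scaled by the same factor, as happens under a change in shading or light intensity. It is an assumed example and is not claimed to be the descriptor used in the publications below; `normalized_rgb` is a hypothetical helper name.

```python
def normalized_rgb(rgb):
    """Map (R, G, B) to chromaticity coordinates that sum to 1.

    Scaling all three channels by the same positive factor leaves the
    result unchanged, so the value is invariant to intensity changes.
    It is NOT invariant to a change in illuminant color.
    """
    total = sum(rgb)
    if total == 0:
        # Black pixel: chromaticity is undefined, return the neutral point.
        return (1.0 / 3.0, 1.0 / 3.0, 1.0 / 3.0)
    return tuple(c / total for c in rgb)


# The same surface observed at full and at half intensity.
bright = normalized_rgb((40, 80, 120))
dark = normalized_rgb((20, 40, 60))
```

Here `bright` and `dark` agree up to floating-point rounding, while the raw RGB values differ by a factor of two. The price of such invariance is a loss of discriminative power: grey, white and black all map to the same chromaticity.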

Publications on this subject:

1. J. van de Weijer, C. Schmid, Applying Color Names to Image Description, Proc. ICIP 2007, San Antonio, USA, 2007.

2. J. van de Weijer, C. Schmid, Blur Robust and Color Constant Image Description, Proc. ICIP 2006, Atlanta, USA, 2006.

3. J. van de Weijer, C. Schmid, Coloring Local Feature Extraction, Proc. ECCV 2006, Part II, 334-348, Graz, Austria, 2006.

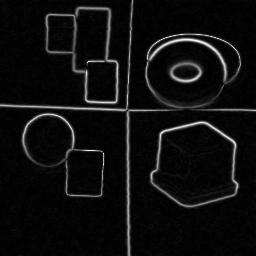

Color Feature Detection

Most of my thesis research was on color feature detection and photometric invariant feature detection. A brief description and some MATLAB code can be found here.

Publications on this subject:

1. J. van de Weijer, Th. Gevers and A.W.M. Smeulders, Robust Photometric Invariant Features from the Color Tensor, IEEE Trans. Image Processing, vol. 15 (1), January 2006.

2. J. van de Weijer, Th. Gevers and A.D. Bagdanov, Boosting Color Saliency in Image Feature Detection, IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 28 (1), January 2006: 150-156.

3. J. van de Weijer, Th. Gevers and J.M. Geusebroek, Edge and Corner Detection by Photometric Quasi-Invariants, IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 27 (4), April 2005.

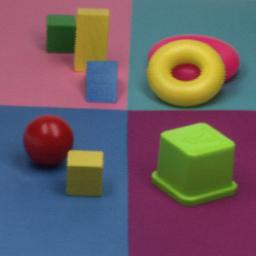

Color Constancy

Color constancy is the ability to measure the colors of objects independently of the color of the light source. The Grey-World assumption, which underlies a well-known color constancy method, states that the average reflectance of surfaces in the world is achromatic. In our research we investigated extending this simple algorithm to the higher-order derivative structure of images. We propose the Grey-Edge hypothesis, which assumes that the average edge difference in a scene is achromatic. Based on this hypothesis, we derive an algorithm for illuminant color estimation. The method is easily combined with Grey-World, max-RGB and Shades of Grey into a single framework for color constancy based on low-level image features (MATLAB code is available).
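
The single framework described above can be sketched as one function with two parameters: the derivative order and a Minkowski norm `p`. The following is a simplified illustration, not the released MATLAB code: it omits the Gaussian smoothing used in practice, approximates first derivatives with forward differences, and the names `estimate_illuminant`, `order` and `p` are assumptions made for this sketch.

```python
import math


def _edge_magnitudes(chan):
    """Gradient magnitudes of one channel from forward differences (no smoothing)."""
    h, w = len(chan), len(chan[0])
    out = []
    for y in range(h - 1):
        for x in range(w - 1):
            dx = chan[y][x + 1] - chan[y][x]
            dy = chan[y + 1][x] - chan[y][x]
            out.append(math.hypot(dx, dy))
    return out


def _minkowski_mean(values, p):
    """Minkowski p-norm average; p = math.inf gives the maximum."""
    if math.isinf(p):
        return max(values)
    return (sum(v ** p for v in values) / len(values)) ** (1.0 / p)


def estimate_illuminant(image, order=0, p=1):
    """Estimate the illuminant color as a unit-length (R, G, B) vector.

    image: rows of (R, G, B) tuples, at least 2x2 pixels when order=1.
    order=0, p=1        -> Grey-World
    order=0, p=math.inf -> max-RGB
    order=0, 1<p<inf    -> Shades of Grey
    order=1             -> first-order Grey-Edge
    """
    estimate = []
    for c in range(3):
        chan = [[px[c] for px in row] for row in image]
        if order == 0:
            values = [abs(v) for row in chan for v in row]
        elif order == 1:
            values = _edge_magnitudes(chan)
        else:
            raise ValueError("this sketch supports order 0 or 1 only")
        estimate.append(_minkowski_mean(values, p))
    norm = math.sqrt(sum(e * e for e in estimate))
    if norm == 0:
        # No signal at all: fall back to a white illuminant.
        return (1 / math.sqrt(3),) * 3
    return tuple(e / norm for e in estimate)
```

For a scene of purely grey surfaces lit by a colored light, every setting recovers the same illuminant direction, because both pixel values and edge magnitudes in each channel are scaled by that channel's illuminant component. On real images the settings differ, and the choice of `order` and `p` becomes a tuning decision.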

Publications on this subject:

1. J. van de Weijer, Th. Gevers, A. Gijsenij, Edge-Based Color Constancy, IEEE Trans. Image Processing, accepted 2007.

This higher-order color constancy theory is further developed in the recent work of Arjan Gijsenij.

Most color constancy methods apply a bottom-up approach: an estimate of the illuminant color is computed from some image statistic. In recent work we have investigated the use of high-level visual information for color constancy. We evaluate a number of illuminant color hypotheses on the likelihood of the semantic content they produce: is the grass green, the road grey, and the sky blue, in correspondence with our prior knowledge of the world? Based on this semantic likelihood we pick the illuminant that results in the most likely image. We use two approaches to obtain the illuminant hypotheses. The first is the use of existing color constancy methods, such as Grey-World and max-RGB. The second is to cast top-down color constancy hypotheses, based on a semantic understanding of the image and prior knowledge of the colors of the recognized classes.

2. J. van de Weijer, C. Schmid, J.J. Verbeek, Using High-Level Visual Information for Color Constancy, Proc. ICCV, Rio de Janeiro, Brazil, 2007.
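
The hypothesis-selection step described above can be sketched as follows: correct the image under each candidate illuminant, score the corrected image with a semantic likelihood, and keep the best candidate. This is an illustration under stated assumptions, not the method of the paper. The diagonal (von Kries style) correction is an assumed image model, and `toy_grass_log_likelihood` is a hypothetical stand-in that treats every pixel as grass, where a real system would use a semantic image model.

```python
import math

# Hypothetical prior: the color we expect grass to have under white light.
GRASS = (0.2, 0.6, 0.2)


def correct(image, illuminant):
    """Divide each channel by the illuminant (all components must be non-zero).

    Gains are scaled so that a white illuminant leaves the image unchanged.
    """
    norm = math.sqrt(sum(e * e for e in illuminant))
    gains = [norm / (math.sqrt(3) * e) for e in illuminant]
    return [[tuple(v * g for v, g in zip(px, gains)) for px in row] for row in image]


def toy_grass_log_likelihood(image):
    """Toy score: negative mean squared distance of the pixels to GRASS."""
    pixels = [px for row in image for px in row]
    total = sum(sum((v - g) ** 2 for v, g in zip(px, GRASS)) for px in pixels)
    return -total / len(pixels)


def select_illuminant(image, hypotheses, log_likelihood):
    """Return the hypothesis whose corrected image is the most likely."""
    return max(hypotheses, key=lambda e: log_likelihood(correct(image, e)))
```

The candidate list passed as `hypotheses` can mix bottom-up estimates, such as those of Grey-World or max-RGB, with top-down candidates derived from recognized classes. The selection step does not need to know where a candidate came from.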