Action recognition with feature trajectories

In general, feature detectors for action recognition rely on spatio-temporal saliency criteria to detect interesting 3D positions in a video. As a more natural way to represent videos, we propose in this work a local feature representation for video sequences based on feature trajectories. In contrast to spatio-temporal interest points, feature trajectories follow points over time. This yields a representation that is better adapted to the motion in the video and lets it benefit from the rich motion information captured by the trajectories.
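To make the idea of a feature trajectory concrete, here is a minimal Python/NumPy sketch of how sampled points could be followed through a video, assuming a dense optical flow field is already available for each pair of consecutive frames. The function name `track_points`, the 3x3 median lookup of the flow, and the default maximum length of 15 frames are illustrative assumptions, not details taken from this work. Computing the optical flow itself is out of scope.

```python
import numpy as np

def track_points(points, flows, max_len=15):
    """Hypothetical sketch: follow 2D points through a sequence of dense flow fields.

    points: (N, 2) array of (x, y) start positions in the first frame.
    flows:  list of (H, W, 2) arrays, flows[t][y, x] = (dx, dy) from frame t to t+1.
    Returns an (N, L + 1, 2) array of positions, with L = min(len(flows), max_len).
    """
    pts = np.asarray(points, dtype=float).copy()
    steps = min(len(flows), max_len)
    positions = [pts.copy()]
    for t in range(steps):
        flow = flows[t]
        h, w = flow.shape[:2]
        # Nearest pixel of each point (assumption: no sub-pixel interpolation).
        xi = np.rint(pts[:, 0]).astype(int)
        yi = np.rint(pts[:, 1]).astype(int)
        # Sample the flow in a 3x3 neighbourhood, clipped to the frame borders.
        samples = []
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ys = np.clip(yi + dy, 0, h - 1)
                xs = np.clip(xi + dx, 0, w - 1)
                samples.append(flow[ys, xs])
        # The median of the 9 samples makes the step robust to flow outliers.
        step = np.median(np.stack(samples), axis=0)  # shape (N, 2)
        pts = pts + step
        positions.append(pts.copy())
    return np.stack(positions, axis=1)
```

For example, with a constant flow of (1, 0.5) over three frames, a point starting at (2, 2) ends at (5, 3.5). A practical tracker would also stop or discard tracks that leave the frame or stop moving; that is omitted here.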

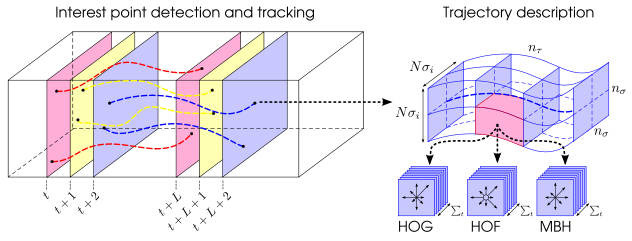

This is ongoing work in collaboration with Heng Wang. Our descriptor based on feature trajectories combines four different descriptors: one for the shape of the trajectory, and three based on Histograms of Oriented Gradients (HOG), Histograms of Optical Flow (HOF), and Motion Boundary Histograms (MBH), respectively. The results we are currently able to obtain are listed below.

| | KTH | YouTube | Hollywood2 |
|---|---|---|---|
| Our approach | 94.2% | 79.8% | 52.5% |
| Harris3D + HOG/HOF [Laptev'08] | 92.0% | 68.7% | 47.3% |
| State-of-the-art | 94.5% [Gilbert'10] | 71.2% [Liu'09] | 50.9% [Gilbert'10] |
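The page does not spell out how the four descriptors are computed. The sketch below illustrates two ingredients under stated assumptions: a trajectory shape descriptor built from the frame-to-frame displacements normalized by their total magnitude (one plausible choice, assumed here), and a generic magnitude-weighted orientation histogram of the kind HOG, HOF, and MBH build on, applied respectively to image gradients, optical flow, and the spatial gradients of each flow component. The function names, the 8-bin default, and the use of signed orientations are hypothetical, and the spatio-temporal grids and normalization schemes of the actual descriptors are not reproduced.

```python
import numpy as np

def trajectory_shape(traj, eps=1e-8):
    """Hypothetical shape descriptor for one trajectory.

    traj: (L + 1, 2) array of point positions.
    Returns the L displacement vectors, divided by the sum of their
    magnitudes (assumed normalization), flattened to a (2L,) vector.
    """
    traj = np.asarray(traj, dtype=float)
    disp = np.diff(traj, axis=0)                 # (L, 2) frame-to-frame displacements
    total = np.linalg.norm(disp, axis=1).sum()   # total length travelled
    return (disp / max(total, eps)).ravel()      # static tracks give all zeros

def orientation_histogram(vx, vy, n_bins=8):
    """Hypothetical magnitude-weighted histogram of 2D vector orientations.

    (vx, vy) are the vector components over a patch: image gradients for a
    HOG-like descriptor, optical flow for HOF, or the spatial gradient of
    one flow component for MBH. Uses signed orientations in [0, 2*pi).
    """
    vx = np.asarray(vx, dtype=float).ravel()
    vy = np.asarray(vy, dtype=float).ravel()
    mag = np.hypot(vx, vy)
    ang = np.mod(np.arctan2(vy, vx), 2 * np.pi)
    # Map each angle to a bin index; clamp guards against rounding up to 2*pi.
    bins = np.minimum((ang / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    hist = np.bincount(bins, weights=mag, minlength=n_bins)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist     # L2-normalize non-empty histograms
```

For a trajectory that moves one pixel right and then one pixel up, `trajectory_shape` returns [0.5, 0, 0, 0.5]. Normalizing by the total displacement makes the shape descriptor independent of how fast the point moves overall.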

For more information, see also:

- My PhD thesis (chapter 5)