Incremental Learning of Object Detectors without Catastrophic Forgetting

Abstract

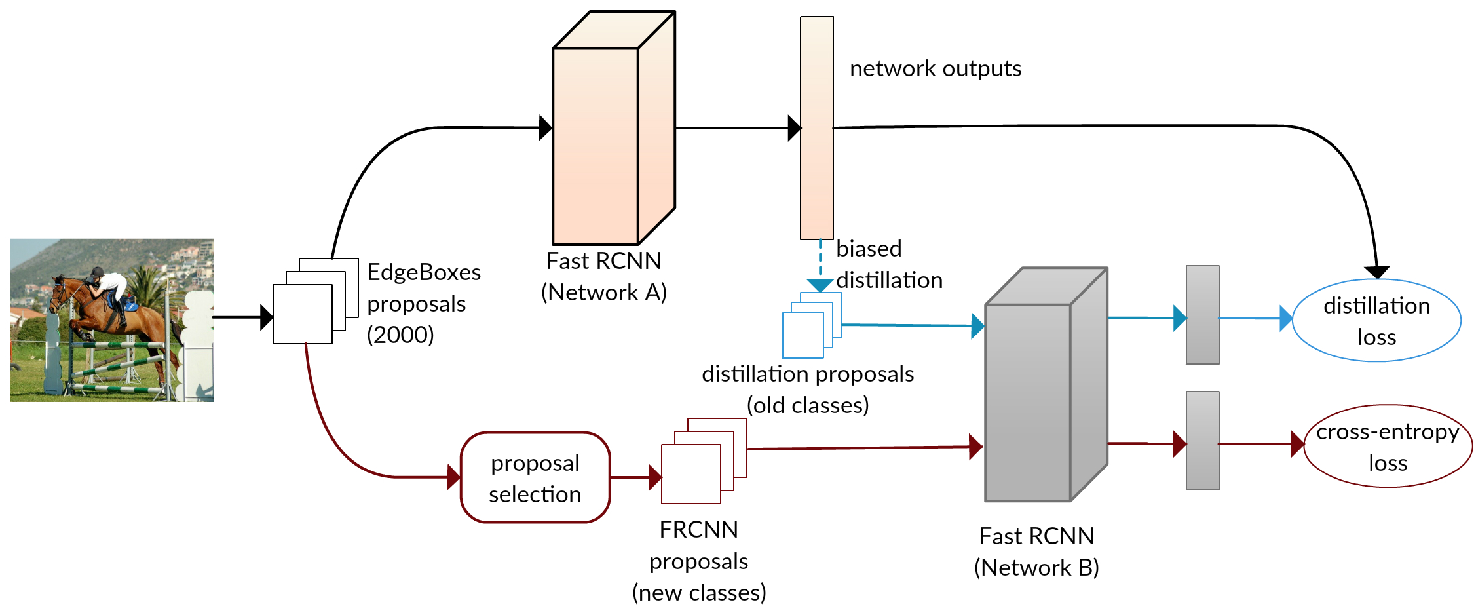

Convolutional neural networks have been highly successful for object detection, but are ill-equipped for incremental learning, i.e., adapting a model trained on an original set of classes to additionally detect objects of new classes, in the absence of the initial training data. They suffer from "catastrophic forgetting": an abrupt degradation of performance on the original set of classes when the training objective is adapted to the new classes. We present a method to address this issue and learn object detectors incrementally when neither the original training data nor annotations for the original classes in the new training set are available. The core of our proposed solution is a loss function that balances the interplay between predictions on the new classes and a new distillation loss, which minimizes the discrepancy between the responses for the old classes from the original and the updated networks. This incremental learning can be performed multiple times, for a new set of classes in each step, with a negligible drop in performance compared to the baseline network trained jointly on all the data. We present object detection results on the PASCAL VOC 2007 and COCO datasets, along with a detailed empirical analysis of the approach.
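The combined objective described in the abstract can be illustrated with a small sketch. This is a minimal plain-Python sketch under stated assumptions, not the authors' implementation: it assumes the distillation term is an L2 penalty between the frozen original network's and the updated network's old-class outputs, computed on class logits after subtracting their per-region mean plus the corresponding bounding-box regression outputs, averaged over N regions and the number of old classes. It also assumes this term is added to a standard detection loss on the new-class annotations with a hypothetical weight `lam`. The function names `mean_center`, `distillation_loss` and `total_loss` are hypothetical and chosen for illustration only.

```python
from typing import List, Sequence


def mean_center(logits: Sequence[float]) -> List[float]:
    """Subtract the mean over the class dimension from one region's logits (assumed step)."""
    m = sum(logits) / len(logits)
    return [v - m for v in logits]


def distillation_loss(
    old_logits: Sequence[Sequence[float]],  # frozen original network, [N][C_old] (assumed layout)
    new_logits: Sequence[Sequence[float]],  # updated network, old-class logits only, [N][C_old]
    old_boxes: Sequence[Sequence[float]],   # frozen network box regression, [N][4 * C_old]
    new_boxes: Sequence[Sequence[float]],   # updated network box regression, [N][4 * C_old]
) -> float:
    """Hypothetical L2 distillation between original and updated old-class responses."""
    n = len(old_logits)
    num_old_classes = len(old_logits[0])
    total = 0.0
    for y_o, y_n, t_o, t_n in zip(old_logits, new_logits, old_boxes, new_boxes):
        # Compare mean-centred logits, so a constant shift across classes is not penalized.
        yo_c = mean_center(y_o)
        yn_c = mean_center(y_n)
        total += sum((a - b) ** 2 for a, b in zip(yn_c, yo_c))
        # Also keep the old-class bounding-box regression outputs close.
        total += sum((a - b) ** 2 for a, b in zip(t_n, t_o))
    # Average over regions and old classes.
    return total / (n * num_old_classes)


def total_loss(detection_loss: float, dist_loss: float, lam: float = 1.0) -> float:
    """Detection loss on the new classes plus a weighted distillation term (assumed form)."""
    return detection_loss + lam * dist_loss


if __name__ == "__main__":
    # One region, two old classes: logits differ after mean-centring, boxes agree.
    d = distillation_loss([[0.0, 0.0]], [[1.0, -1.0]], [[0.0] * 8], [[0.0] * 8])
    print(d, total_loss(0.5, d))
```

The mean-centring step means that if the updated network only shifts all old-class logits by the same constant, the distillation term stays at zero; only changes in the relative scores between old classes, or in their box predictions, are penalized.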

BibTeX

@inproceedings{shmelkov17,
  AUTHOR = {Shmelkov, Konstantin and Schmid, Cordelia and Alahari, Karteek},
  TITLE = {Incremental Learning of Object Detectors without Catastrophic Forgetting},
  BOOKTITLE = {{IEEE International Conference on Computer Vision (ICCV)}},
  YEAR = {2017},
}

Acknowledgements

This work was supported in part by the ERC advanced grant ALLEGRO, a Google research award, and gifts from Facebook and Intel. We gratefully acknowledge NVIDIA's support with the donation of GPUs used for this work.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright. This page style is taken from Guillaume Seguin.