Diversity with Cooperation: Ensemble Methods for Few-Shot Classification

Abstract

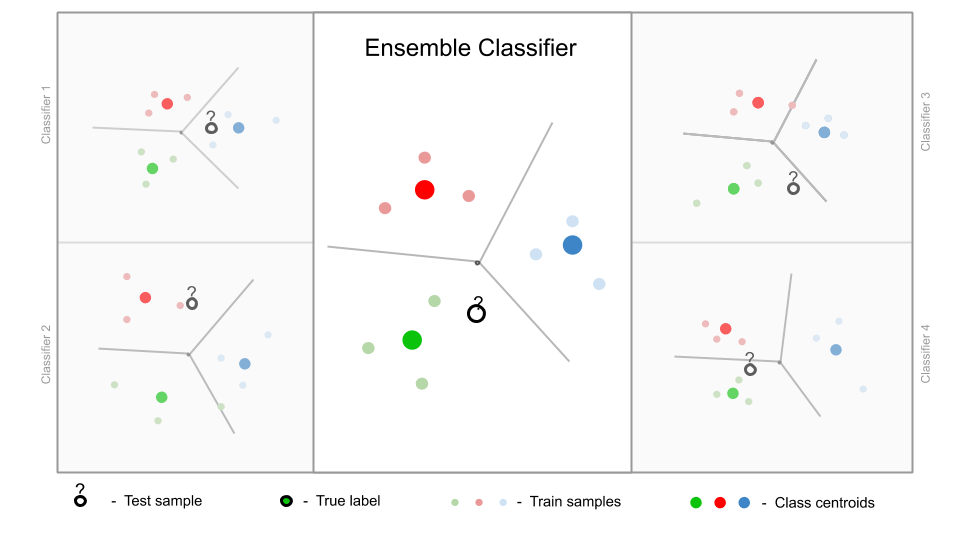

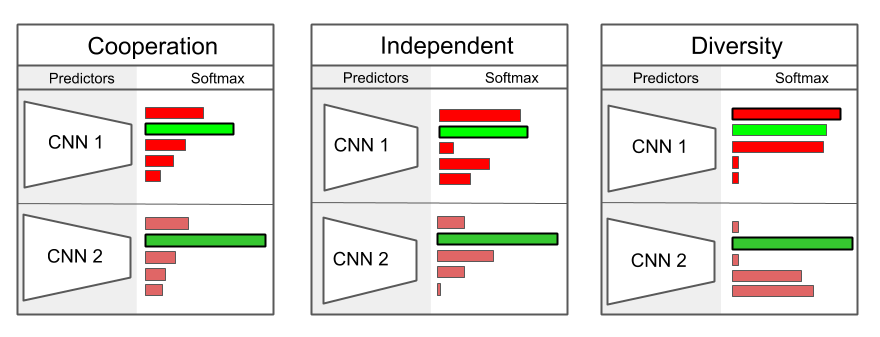

Few-shot classification consists of learning a predictive model that is able to effectively adapt to a new class, given only a few annotated samples. To solve this challenging problem, meta-learning has become a popular paradigm that advocates the ability to "learn to adapt". Recent works have shown, however, that simple learning strategies without meta-learning could be competitive. In this paper, we go a step further and show that by addressing the fundamental high-variance issue of few-shot learning classifiers, it is possible to significantly outperform current meta-learning techniques. Our approach consists of designing an ensemble of deep networks to leverage the variance of the classifiers, and introducing new strategies to encourage the networks to cooperate, while encouraging prediction diversity. Evaluation is conducted on the mini-ImageNet and CUB datasets, where we show that even a single network obtained by distillation yields state-of-the-art results.

Different ways to train ensembles
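As a rough illustration of the ensemble idea described in the abstract, the NumPy sketch below averages the class probabilities of several networks and computes a pairwise penalty between members. This page does not give the actual loss functions, so everything here is an assumption made for illustration: the function names, the use of symmetrized KL divergence for "cooperation", the use of cosine similarity for "diversity", and the choice to compare members only on non-ground-truth classes. The official code should be consulted for the real formulation.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def ensemble_predict(member_logits):
    """Average class probabilities over members.

    member_logits has shape (M, N, C): M networks, N samples, C classes.
    """
    return softmax(member_logits).mean(axis=0)

def pairwise_penalty(member_logits, labels, mode, eps=1e-12):
    """Hypothetical pairwise term, to be minimized alongside cross-entropy.

    mode="cooperation": symmetrized KL divergence (pulls members together).
    mode="diversity":   cosine similarity (pushes members apart).
    Both are computed on renormalized non-ground-truth probabilities.
    """
    probs = softmax(member_logits)
    m, n, c = probs.shape
    # Mask out the ground-truth class of every sample.
    mask = np.ones((n, c), dtype=bool)
    mask[np.arange(n), labels] = False
    rest = probs[:, mask].reshape(m, n, c - 1)
    rest = rest / rest.sum(axis=-1, keepdims=True)

    total, pairs = 0.0, 0
    for i in range(m):
        for j in range(i + 1, m):
            p, q = rest[i], rest[j]
            if mode == "cooperation":
                log_ratio = np.log(p + eps) - np.log(q + eps)
                kl_pq = (p * log_ratio).sum(axis=-1)
                kl_qp = (-q * log_ratio).sum(axis=-1)
                value = 0.5 * (kl_pq + kl_qp)
            elif mode == "diversity":
                norms = np.linalg.norm(p, axis=-1) * np.linalg.norm(q, axis=-1)
                value = (p * q).sum(axis=-1) / norms
            else:
                raise ValueError(f"unknown mode: {mode}")
            total += value.mean()
            pairs += 1
    return total / pairs

# Example with random logits standing in for 3 networks, 4 samples, 5 classes.
rng = np.random.default_rng(0)
logits = rng.normal(size=(3, 4, 5))
labels = np.array([0, 1, 2, 3])
avg_probs = ensemble_predict(logits)
coop = pairwise_penalty(logits, labels, "cooperation")
div = pairwise_penalty(logits, labels, "diversity")
```

In a training loop, one such term would typically be added to the per-network classification loss with a weighting coefficient; the averaged probabilities could also serve as soft targets when distilling the ensemble into a single network, which is again a sketch rather than the authors' exact procedure.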

Paper

BibTeX

@inproceedings{dvornik2019diversity,
  title={Diversity with Cooperation: Ensemble Methods for Few-Shot Classification},
  author={Dvornik, Nikita and Schmid, Cordelia and Mairal, Julien},
  booktitle={{IEEE International Conference on Computer Vision (ICCV)}},
  year={2019}
}

Code

The code is available in the official GitHub repo.

Acknowledgements

This work was supported by a grant from ANR (MACARON project under grant number ANR-14-CE23-0003-01), by the ERC grant number 714381 (SOLARIS project), the ERC advanced grant ALLEGRO and gifts from Amazon and Intel.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright. This page style is taken from Guillaume Seguin.